Modernization of Legacy Check Systems

Financial institutions and their vendors are taking the necessary steps to modernize legacy check systems to meet the needs of their businesses and customers. Many financial institutions are still processing checks on platforms developed 20 years ago!

Although electronic payments may grab the spotlight as the future of payments, improvements in check processing and fraud prevention continue to arrive steadily. They support platform modernization across teller, branch, mobile, remote deposit capture (RDC), archive, lockbox, and ATM image capture.

AI, machine learning, and deep learning-based systems are well positioned to deliver stronger check processing automation, payment negotiability testing, fraud detection, compliance, and analysis of consumer and corporate behavior, which justifies continued investment.

Legacy Check Recognition Limitations

Legacy check recognition systems deployed in the market today rely primarily on the following technologies:

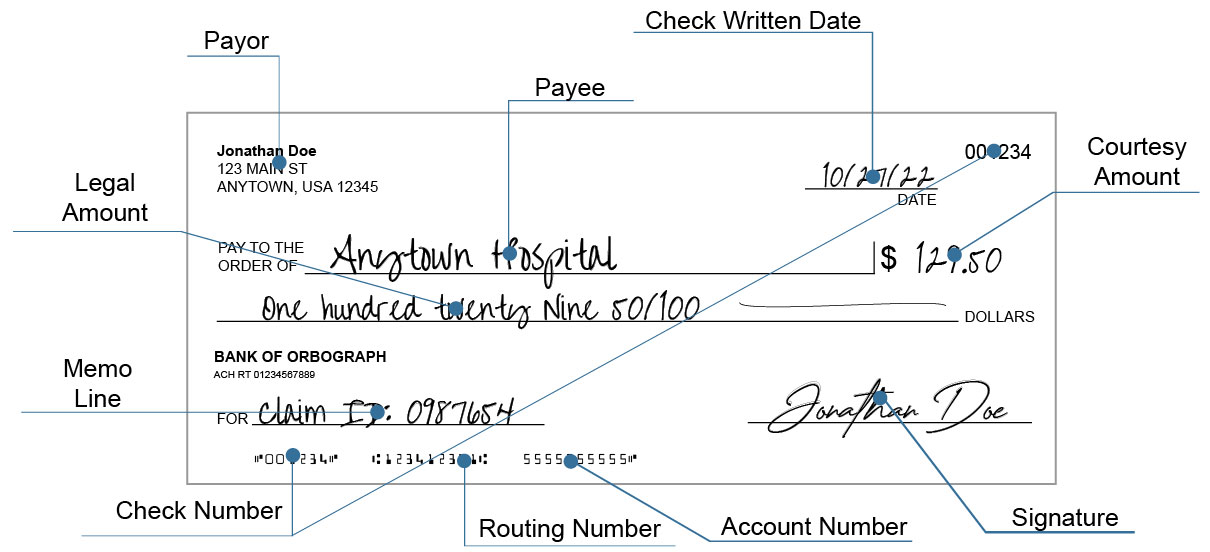

- Courtesy amount recognition (CAR): reads the numeric amount in the courtesy box using traditional courtesy amount algorithms

- Legal amount recognition (LAR): reads the written-out legal amount using traditional OCR engines and toolkits

- Optical character recognition (OCR): strong on preprinted fields, but locating fields on business checks, whose layouts vary widely, is difficult

- Intelligent character recognition (ICR): recognizes handwritten fields

- Magnetic ink character recognition (MICR): even MICR reading combined with OCR can still yield reject rates of 1-2%

- Intelligent word recognition (IWR): applicable to the legal amount or payee fields, but again, locating those fields is challenging

In 2025, many field installations deliver CAR/LAR read rates of only 75% to 85%, with error rates approaching 2-4%.
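To make figures like these concrete, the sketch below computes read, reject, and error rates from item counts. It assumes a common convention that the article does not state: read rate is the share of items the engine accepts without manual keying, and error rate is the share of all items that were accepted but misread. Some vendors instead measure errors against accepted items only, so both are returned. The `RecognitionCounts` type and the batch numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RecognitionCounts:
    """Hypothetical per-batch counts from a CAR/LAR engine."""
    total: int     # items presented to the engine
    accepted: int  # items the engine auto-read (not sent to manual keying)
    misread: int   # accepted items whose amount turned out to be wrong


def recognition_rates(c: RecognitionCounts) -> dict[str, float]:
    """Return read, reject, and error rates as fractions.

    Assumed convention: error_rate = misread / total. The alternative
    convention, misread / accepted, is returned as error_rate_of_accepted.
    """
    if c.total <= 0:
        raise ValueError("total must be positive")
    if not 0 <= c.misread <= c.accepted <= c.total:
        raise ValueError("expected 0 <= misread <= accepted <= total")
    return {
        "read_rate": c.accepted / c.total,
        "reject_rate": (c.total - c.accepted) / c.total,
        "error_rate": c.misread / c.total,
        "error_rate_of_accepted": c.misread / c.accepted if c.accepted else 0.0,
    }


# Hypothetical batch: 10,000 checks, 8,000 auto-read, 300 of those misread.
rates = recognition_rates(RecognitionCounts(total=10_000, accepted=8_000, misread=300))
for name, value in rates.items():
    print(f"{name}: {value:.2%}")
```

In this made-up batch the 20% of rejected items would go to manual keying, and the choice of denominator moves the reported error rate from 3% to 3.75%, which is worth checking when comparing vendor figures.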

Check Recognition: AI-based Deep Learning Models

New AI technology works differently from traditional CAR/LAR, OCR, and ICR. In AI terminology, the Anywhere Recognition process can be described as follows:

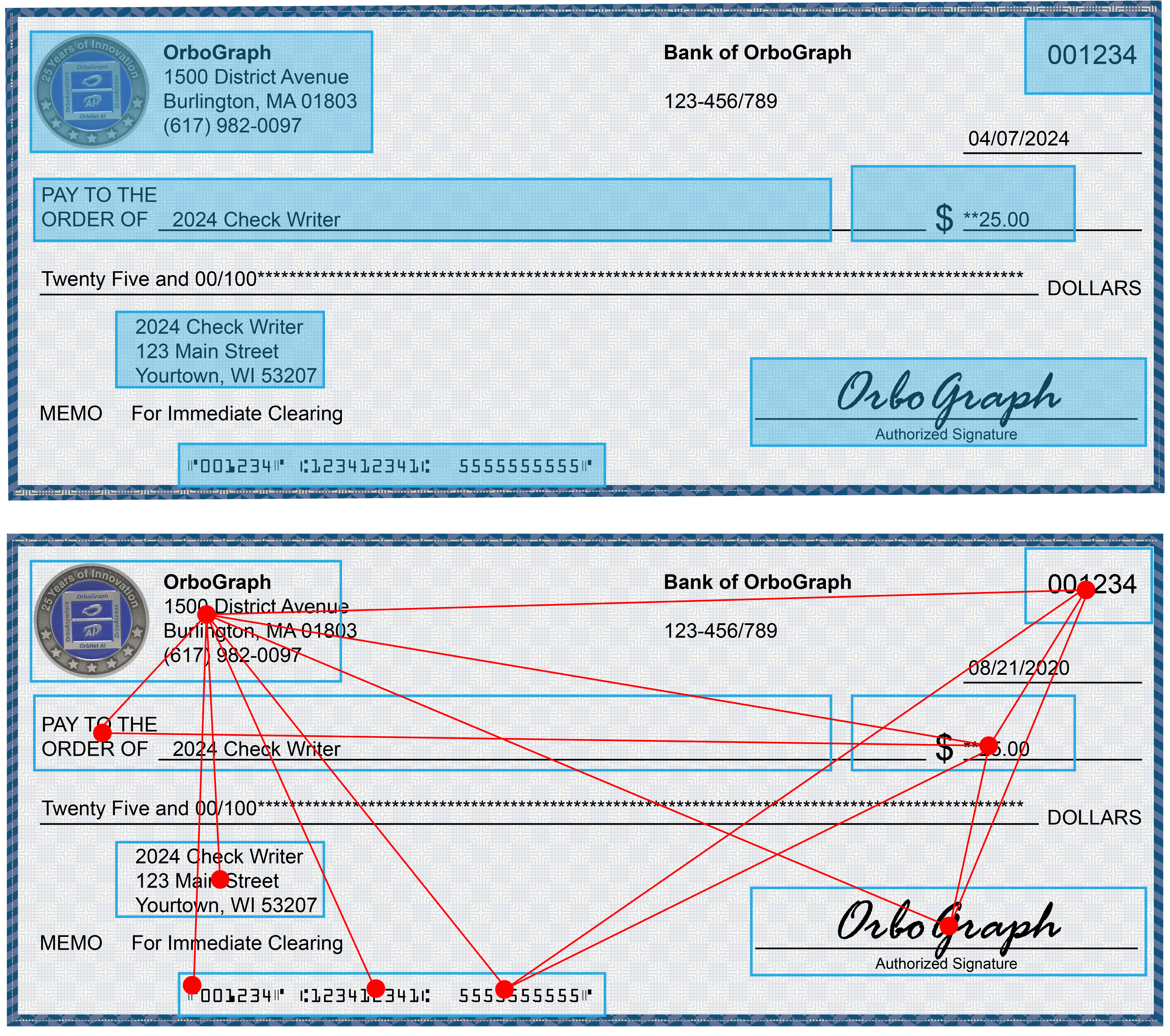

Field Detection

Field detection uses deep learning models to identify and locate specific fields on checks or internal documents. It is a specialized application of object detection, a technique with many uses beyond document analysis. Neural networks trained on large datasets learn the patterns and features that distinguish relevant fields, such as the date, amount, or signature. Once the model identifies a field, it records the field's precise coordinates so the region can be passed on for data extraction, validation, and processing.
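The step where the model locks onto a field's coordinates can be sketched as post-processing of detector output. The sketch assumes a detector (not shown) that returns candidate boxes, each with a field label, a confidence score, and pixel coordinates. It keeps the highest-scoring box per field above a threshold and crops that region for extraction. The `Detection` type, the 0.5 threshold, and the field names are illustrative assumptions, and the image is a plain 2D list of grayscale pixels so the example needs no imaging library.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    """One candidate field box from a hypothetical object-detection model."""
    label: str    # e.g. "date", "courtesy_amount", "signature"
    score: float  # model confidence in [0, 1]
    x0: int       # left edge, inclusive
    y0: int       # top edge, inclusive
    x1: int       # right edge, exclusive
    y1: int       # bottom edge, exclusive


def select_fields(detections: list[Detection], min_score: float = 0.5) -> dict[str, Detection]:
    """Keep the highest-scoring detection for each field label at or above min_score."""
    best: dict[str, Detection] = {}
    for det in detections:
        if det.score < min_score:
            continue  # too uncertain to use
        current = best.get(det.label)
        if current is None or det.score > current.score:
            best[det.label] = det
    return best


def crop(image: list[list[int]], det: Detection) -> list[list[int]]:
    """Cut the detected field out of a row-major grayscale image."""
    return [row[det.x0:det.x1] for row in image[det.y0:det.y1]]


# Hypothetical 4x6 image and detector output.
image = [[10 * r + c for c in range(6)] for r in range(4)]
candidates = [
    Detection("courtesy_amount", 0.92, 3, 1, 6, 3),
    Detection("courtesy_amount", 0.40, 0, 0, 2, 2),  # below threshold, dropped
    Detection("date", 0.81, 0, 0, 2, 1),
]
fields = select_fields(candidates)
print(crop(image, fields["courtesy_amount"]))
```

Production detectors typically also apply non-maximum suppression to overlapping boxes; keeping one box per label is a simplification that suits checks, where each field normally appears once.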

Text Classification

Text classification is a core natural language processing (NLP) task in which deep learning models assign labels to text segments based on their content; applied to document fields, it determines what each field contains. For instance, in financial documents a model can be trained to identify and extract the value in the amount field, a key step in automating invoice processing and check verification. Architectures such as recurrent neural networks (RNNs) and transformers can significantly improve the accuracy and efficiency of this kind of text analysis.
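At its output end, a classifier of this kind typically produces one raw score (logit) per label, and a softmax turns those scores into probabilities. The sketch below shows only that final step. The label set and the logits are hypothetical stand-ins for what an RNN or transformer would compute from a field's content.

```python
import math

# Hypothetical label set for check fields.
LABELS = ["amount", "date", "payee", "memo"]


def softmax(logits: list[float]) -> list[float]:
    """Convert raw scores to probabilities; subtracting the max avoids overflow."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(logits: list[float]) -> tuple[str, float]:
    """Return the most probable label and its probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return LABELS[best], probs[best]


# Hypothetical logits a trained model might emit for the text "$1,250.00".
label, prob = classify([4.0, 1.0, 0.5, -1.0])
print(label, round(prob, 3))
```

The probability returned alongside the label is the kind of raw score that the interpretation stage later works with.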

Running a trained text classification model on new check images is called inference. This phase follows training: the model is deployed in a production environment, where it interprets and classifies incoming data without further learning. Inference automates the tedious work of sifting through large volumes of text, streamlining workflows and reducing human error. As deep learning models continue to evolve, they improve the precision of text classification and broaden its applications across industries, supporting data-driven decision-making.
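Inference means applying an already-trained model to new data without updating it. A minimal sketch of a batched inference loop follows. `StubModel` is a hypothetical placeholder for a trained classifier (a fixed rule stands in for learned weights so the example runs without an ML framework), and the batch size of 2 is chosen only to make the batching visible.

```python
from collections.abc import Iterable, Iterator


class StubModel:
    """Hypothetical stand-in for a trained field classifier.

    A real deployment would load learned weights; this fixed rule labels
    any text containing a digit as "amount" and everything else as "payee".
    """

    def predict_batch(self, texts: list[str]) -> list[str]:
        return ["amount" if any(ch.isdigit() for ch in t) else "payee" for t in texts]


def batched(items: Iterable[str], size: int) -> Iterator[list[str]]:
    """Yield consecutive lists of at most `size` items."""
    if size < 1:
        raise ValueError("size must be at least 1")
    batch: list[str] = []
    for item in items:
        batch.append(item)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:
        yield batch  # final, possibly shorter batch


def run_inference(model: StubModel, texts: Iterable[str], batch_size: int = 2) -> list[str]:
    """Classify new items batch by batch; the model is only read, never updated."""
    predictions: list[str] = []
    for batch in batched(texts, batch_size):
        predictions.extend(model.predict_batch(batch))
    return predictions


new_fields = ["$1,250.00", "ACME Supply Co.", "87.15", "Jane Doe", "300"]
print(run_inference(StubModel(), new_fields))
```

Batching lets a model handle several fields per call, which is a common way to manage throughput in production; the loop itself would not change if the stub were replaced by a real classifier exposing a similar batch prediction method.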

Interpretation

Interpretation translates raw scores into actionable insights. Each extracted value comes with a score that reflects the model's confidence that the value is correct, which lets stakeholders weigh likely outcomes before acting on it. These scores become more useful when they are normalized through decision-tree models, which put them on a standard scale so that they can be compared consistently and reliably across fields, scenarios, and datasets.
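The article does not say how its decision-tree normalization works. One plausible reading, sketched below as an assumption, is calibration: fit a shallow regression tree that maps a raw model score to the observed rate at which reads with similar scores were correct, so a normalized score of 0.9 would mean roughly 90% of comparable reads were right. The tree splits on the raw score alone; `fit_tree`, `calibrate`, and the training history are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    value: float                       # mean correctness of training reads in this node
    threshold: Optional[float] = None  # split point; None marks a leaf
    left: Optional["Node"] = None      # scores <= threshold
    right: Optional["Node"] = None     # scores > threshold


def _sse(ys: list[float]) -> float:
    """Sum of squared errors around the mean (the regression-tree split criterion)."""
    mean = sum(ys) / len(ys)
    return sum((y - mean) ** 2 for y in ys)


def fit_tree(pairs: list[tuple[float, float]], depth: int = 2, min_leaf: int = 2) -> Node:
    """Fit a 1-D regression tree on (raw_score, correct) pairs, with correct in {0, 1}."""
    pairs = sorted(pairs)
    ys = [y for _, y in pairs]
    node = Node(value=sum(ys) / len(ys))
    if depth == 0 or len(pairs) < 2 * min_leaf:
        return node
    best: Optional[tuple[float, int]] = None  # (cost, split index)
    for i in range(min_leaf, len(pairs) - min_leaf + 1):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # cannot split between equal scores
        cost = _sse(ys[:i]) + _sse(ys[i:])
        if best is None or cost < best[0]:
            best = (cost, i)
    if best is None or best[0] >= _sse(ys):
        return node  # no split reduces the error
    i = best[1]
    node.threshold = (pairs[i - 1][0] + pairs[i][0]) / 2
    node.left = fit_tree(pairs[:i], depth - 1, min_leaf)
    node.right = fit_tree(pairs[i:], depth - 1, min_leaf)
    return node


def calibrate(node: Node, score: float) -> float:
    """Walk down to the leaf for `score` and return its empirical correctness rate."""
    while node.threshold is not None:
        node = node.left if score <= node.threshold else node.right
    return node.value


# Hypothetical history: (raw engine score, 1 if the read was correct else 0).
history = [(0.10, 0), (0.20, 0), (0.30, 0), (0.40, 1), (0.50, 0),
           (0.60, 1), (0.70, 1), (0.80, 1), (0.90, 1), (0.95, 1)]
tree = fit_tree(history, depth=2, min_leaf=2)
for raw in (0.2, 0.45, 0.97):
    print(raw, "->", calibrate(tree, raw))
```

With only ten made-up reads the leaves land on coarse values such as 0.0, 0.5, and 1.0; in practice one would fit on many labeled reads and choose `min_leaf` large enough that each leaf's rate is stable.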