GPUs vs. CPUs: Understanding Why GPUs Are Superior to CPUs for Machine Learning

Last week, we took a deep dive into a Mythbusters video on CPUs vs. GPUs, detailing how GPUs provide greater speed and accuracy than CPUs, which is essential for check processing and fraud detection. But why are GPUs necessary for artificial intelligence and machine learning?

GPUs are the "Heavy Lifters"

For years, the tech industry has relied on CPUs (central processing units) to do the heavy lifting, but the new "extra-heavy lifter" is the GPU (graphics processing unit).

Jason Dsouza of Towards Data Science offers an effective metaphor:

If you consider a CPU as a Maserati, a GPU can be considered as a big truck.

The CPU (Maserati) can fetch small amounts of packages (3-4 passengers) in the RAM quickly, whereas a GPU (the truck) is slower but can fetch large amounts of memory (~20 passengers) in one turn.
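The metaphor is really about latency versus throughput. A small sketch with made-up capacities and trip times (hypothetical numbers chosen to match the metaphor, not hardware measurements) shows how the slower vehicle can still finish a large job first:

```python
import math

def total_time(passengers: int, capacity: int, trip_time: float) -> float:
    """Time to move every passenger when each trip carries at most `capacity` people."""
    trips = math.ceil(passengers / capacity)
    return trips * trip_time

# Hypothetical figures: the car makes a trip twice as fast,
# but the truck carries five times as many passengers per trip.
CAR_CAPACITY, CAR_TRIP_TIME = 4, 1.0
TRUCK_CAPACITY, TRUCK_TRIP_TIME = 20, 2.0

for load in (4, 100):
    car = total_time(load, CAR_CAPACITY, CAR_TRIP_TIME)
    truck = total_time(load, TRUCK_CAPACITY, TRUCK_TRIP_TIME)
    print(f"{load:>3} passengers: car {car:.0f}, truck {truck:.0f}")
```

For a small load the car wins (1 time unit vs. 2); for 100 passengers the truck wins (10 vs. 25). That mirrors the CPU's low latency on small tasks versus the GPU's high throughput on large ones.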

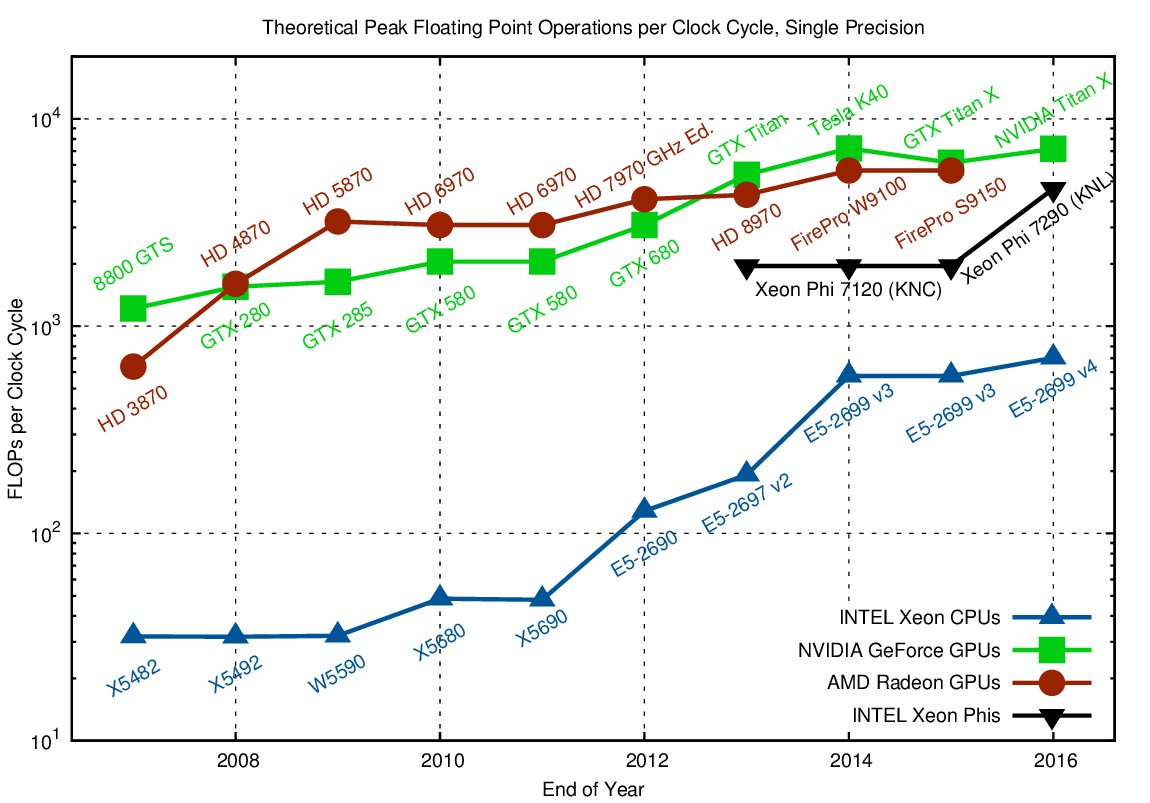

With that visual in mind, let's look at the speed of GPU processors vs. CPU processors when handling these large amounts of computation. In the chart below, you can see that the AMD Radeon GPU and Nvidia GeForce GPU clearly outperform the Intel Xeon CPU in terms of speed:

In addition to the GPU's advantage in processing speed, we also need to look at memory bandwidth, the rate at which a processor can move data in and out of memory. As you may or may not know, GPUs come with dedicated VRAM (video RAM) that can be devoted solely to their computations and is built for much higher bandwidth, while a CPU shares system memory with the other tasks the machine is running at the same time. The chart below shows that, as the technology continues to improve, GPUs are the better choice for artificial intelligence and machine learning because they can handle more data at higher speeds.
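Bandwidth matters because every weight has to be read from memory before it can be used. As a back-of-the-envelope sketch, the time to read a model's weights once is roughly its size in bytes divided by memory bandwidth. The bandwidth figures below are hypothetical placeholders, not specifications of any particular CPU or GPU; substitute the numbers from your own hardware's datasheet.

```python
def read_time_seconds(num_params: int, bytes_per_param: int, bandwidth_gb_s: float) -> float:
    """Seconds to stream every parameter from memory once at the given bandwidth (GB/s)."""
    total_bytes = num_params * bytes_per_param
    return total_bytes / (bandwidth_gb_s * 1e9)

# Hypothetical model: 1 billion weights stored as 32-bit (4-byte) floats.
PARAMS = 1_000_000_000
BYTES_PER_PARAM = 4

# Placeholder bandwidths in GB/s, for illustration only.
for name, bandwidth in [("system RAM (hypothetical)", 50.0), ("GPU VRAM (hypothetical)", 500.0)]:
    seconds = read_time_seconds(PARAMS, BYTES_PER_PARAM, bandwidth)
    print(f"{name}: {seconds:.3f} s per pass over the weights")
```

Training reads the weights over and over, so a tenfold difference in bandwidth is paid on every single pass.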

Why Are Speed and Memory Important for Artificial Intelligence and Machine Learning?

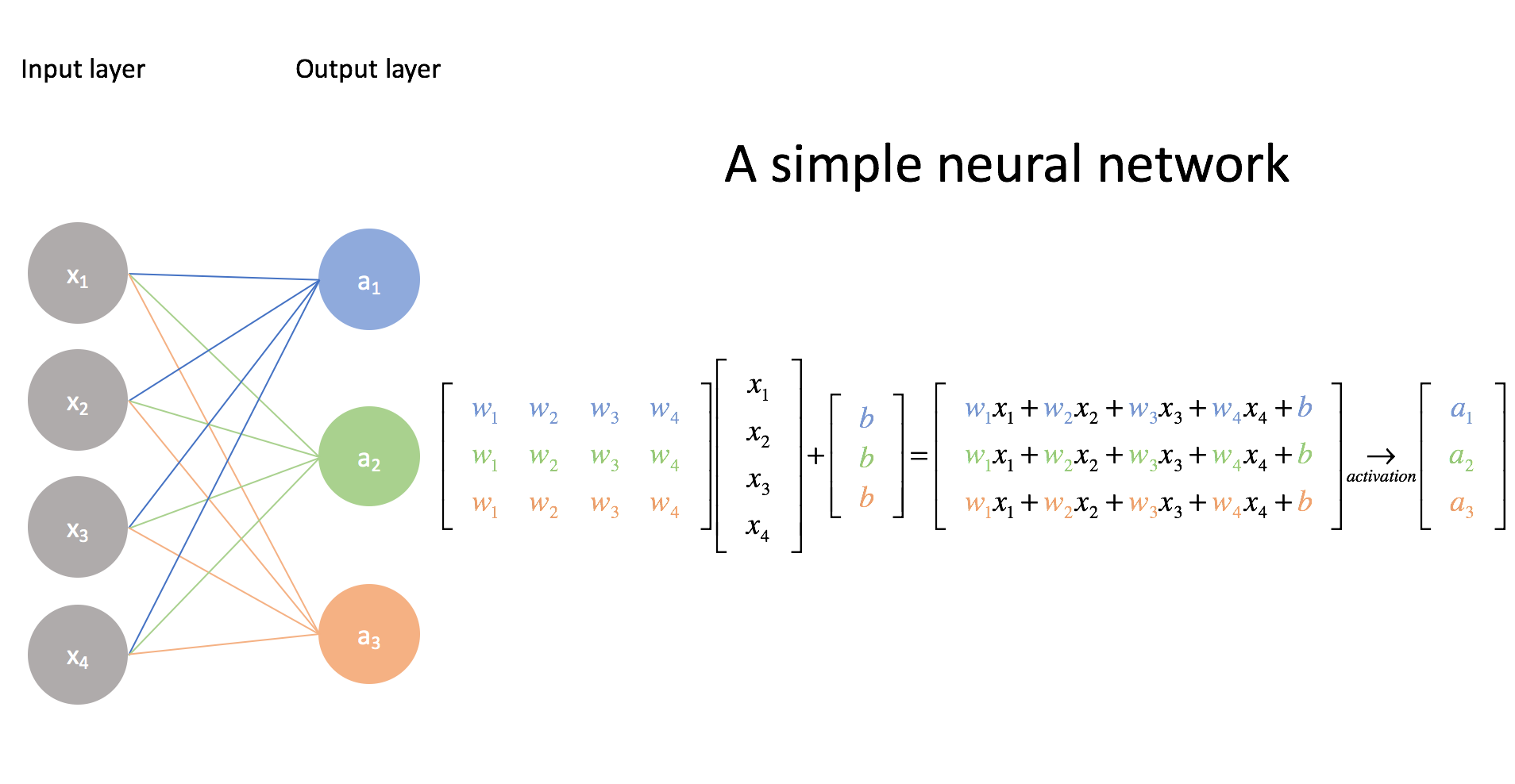

Let's take a look at a simple neural network diagram provided by Towards Data Science:

Source: jeremyjordan.me

Between the input and the output sit hidden layers, where the input is processed using learned values called "weights," and the network produces its best prediction as the output. For check processing, this means a new check image is scored using what the model learned from the check images it was trained on.
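To make "weights" concrete: a hidden layer multiplies its input vector by a weight matrix, adds a bias, and applies a nonlinearity. Here is a minimal pure-Python sketch of that idea, using tiny made-up weights (hypothetical values, not a trained check-processing model):

```python
def relu(x: float) -> float:
    """Rectified linear unit: passes positive values through, zeroes out negatives."""
    return max(0.0, x)

def dense(inputs: list[float], weights: list[list[float]], biases: list[float],
          activate: bool = True) -> list[float]:
    """One fully connected layer: output[i] = dot(weights[i], inputs) + biases[i], optionally ReLU'd."""
    outputs = []
    for row, b in zip(weights, biases):
        z = sum(w * x for w, x in zip(row, inputs)) + b  # weighted sum of the inputs
        outputs.append(relu(z) if activate else z)
    return outputs

# Hypothetical toy network: 3 inputs -> 2 hidden units -> 1 output score.
x = [1.0, 0.5, -1.0]
hidden = dense(x, weights=[[0.2, -0.4, 0.1], [0.5, 0.3, -0.2]], biases=[0.0, 0.1])
output = dense(hidden, weights=[[1.0, 1.0]], biases=[-0.5], activate=False)
print(hidden, output)
```

Each layer is just a batch of multiply-and-add operations; a real network runs the same arithmetic across thousands or millions of weights, which is exactly the kind of work a GPU is built for.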

So what happens when you have a large volume of checks to process with a model holding millions of weights?

If your neural network has around 10, 100, or even 100,000 parameters, a computer can still handle it in a matter of minutes, or a few hours at most.
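Where do those parameter counts come from? In a fully connected layer with n inputs and m outputs there are n × m weights plus m biases. The sketch below totals them for a few arbitrary layer sizes (our own examples, not from the source). A small image classifier with 784 inputs (a 28 × 28 pixel image), 128 hidden units, and 10 outputs already lands near the 100,000 mark.

```python
def count_parameters(layer_sizes: list[int]) -> int:
    """Total weights plus biases in a fully connected network with the given layer widths."""
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out + n_out  # n_in x n_out weight matrix, plus n_out biases
    return total

print(count_parameters([10, 8, 1]))                      # 97
print(count_parameters([784, 128, 10]))                  # 101770
print(count_parameters([10_000, 4_096, 4_096, 1_000]))   # 61842408
```

Because each layer's count is a product of two widths, making a network a few times wider multiplies the parameter count far faster than it grows the diagram.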

But what if your neural network has more than 10 billion parameters? Training a system like this with the traditional, CPU-based approach could take years. Your computer would probably give up before you’re even one-tenth of the way.
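Why "years"? A rough rule of thumb often used for dense networks is that training costs about 6 floating-point operations (FLOPs) per parameter per training example: about 2 for the forward pass and about 4 for the backward pass. The sketch below applies that approximation to the 10-billion-parameter case. The dataset size and both throughput figures are hypothetical placeholders, not measured benchmarks.

```python
SECONDS_PER_DAY = 24 * 3600

def training_time_days(params: float, examples: float, epochs: int, flops_per_second: float) -> float:
    """Estimate wall-clock training time using the ~6 FLOPs per parameter per example approximation."""
    total_flops = 6 * params * examples * epochs
    return total_flops / flops_per_second / SECONDS_PER_DAY

PARAMS = 10e9      # 10 billion parameters
EXAMPLES = 1e9     # hypothetical number of training examples
EPOCHS = 1

# Hypothetical sustained throughputs, for illustration only.
for name, flops_per_second in [("CPU (hypothetical 1 TFLOP/s)", 1e12),
                               ("GPU (hypothetical 100 TFLOP/s)", 100e12)]:
    days = training_time_days(PARAMS, EXAMPLES, EPOCHS, flops_per_second)
    print(f"{name}: {days:,.1f} days")
```

The exact numbers depend entirely on your hardware and data, but the ratio is the point: a hundredfold gain in throughput turns roughly two years of compute into about a week.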

A neural network that takes search input and predicts from 100 million outputs, or products, will typically end up with about 2,000 parameters per product. So you multiply those, and the final layer of the neural network is now 200 billion parameters. And I have not done anything sophisticated. I’m talking about a very, very dead simple neural network model. — a Ph.D. student at Rice University.
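The arithmetic in that quote checks out, and it also hints at the memory problem. A quick sketch, assuming each parameter is stored as a 32-bit (4-byte) float; the storage format is our assumption, not part of the quote:

```python
OUTPUTS = 100_000_000          # 100 million products
PARAMS_PER_OUTPUT = 2_000      # about 2,000 parameters per product

final_layer_params = OUTPUTS * PARAMS_PER_OUTPUT
bytes_fp32 = final_layer_params * 4   # 4 bytes per 32-bit float

print(f"{final_layer_params:,} parameters")                               # 200,000,000,000 parameters
print(f"{bytes_fp32 / 1e9:,.0f} GB just to store the final layer's weights")  # 800 GB just to store ...
```

Before a single prediction is made, that is 800 GB of weights that must be held in memory and read on every pass, which is where both processing speed and memory bandwidth come into play.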

Why GPUs Are Needed for Real-Time Check Processing

We've recently detailed why GPUs are important for banking and check processing. Beyond that use case, we feel the following conclusion from Towards Data Science really hits the proverbial "nail on the head" when it comes to GPUs, artificial intelligence, and machine learning:

If your neural network involves tons of calculations involving many hundreds of thousands of parameters, you might want to consider investing in a GPU.

As a general rule, GPUs are a safer bet for fast machine learning because, at its heart, data science model training consists of simple matrix math calculations, the speed of which may be greatly enhanced if the computations are carried out in parallel.
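Why does parallelism help so much? Each element of a matrix product depends only on one row of the first matrix and one column of the second, so every output row can be computed independently of the others. The minimal sketch below splits a product by rows across a thread pool. In standard CPython builds, threads share a global interpreter lock, so this illustrates the decomposition rather than delivering a real speedup; a GPU applies the same idea across thousands of cores at once.

```python
from concurrent.futures import ThreadPoolExecutor

Matrix = list[list[float]]

def row_times_matrix(row: list[float], b: Matrix) -> list[float]:
    """One output row: dot product of `row` with every column of `b`."""
    return [sum(r * b[k][j] for k, r in enumerate(row)) for j in range(len(b[0]))]

def matmul_parallel(a: Matrix, b: Matrix, workers: int = 4) -> Matrix:
    """Compute a @ b by handing each output row to a separate task; rows never depend on each other."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns results in input order, so rows come back in place
        return list(pool.map(lambda row: row_times_matrix(row, b), a))

def matmul_serial(a: Matrix, b: Matrix) -> Matrix:
    """Same computation, one row at a time, for comparison."""
    return [row_times_matrix(row, b) for row in a]

a = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
b = [[7.0, 8.0], [9.0, 10.0]]
print(matmul_parallel(a, b))  # [[25.0, 28.0], [57.0, 64.0], [89.0, 100.0]]
assert matmul_parallel(a, b) == matmul_serial(a, b)
```

The parallel and serial versions produce identical results because no row needs another row's answer, which is precisely the property that lets GPUs spread model training across many cores.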