Explainable AI: Transparency Important to Fraud Detection

- While effective, the AI "black box" needs to be uncovered

- A closed environment invites errors and suspicion

- Explainable AI provides a solution

In a recent blog post, Brighterion -- a Mastercard company (founded in 2000 and acquired by Mastercard in 2017) that provides real-time artificial intelligence technology to 74 of the 100 largest U.S. banks and more than 2,000 companies worldwide -- takes on the challenge of bringing "transparency" to a topic that financial organizations and their customers are struggling to understand: how AI works.

From a financial institution's (FI's) perspective, not only is the FI responsible for how the AI performs for its customers, but there is also a litany of compliance and regulatory factors that must be addressed, such as the Equal Credit Opportunity Act (ECOA) in the U.S. and the Coordinated Plan on Artificial Intelligence in the EU. And, as AI adoption grows, model explainability will become increasingly important and result in new laws and regulations.

Image Source: Brighterion

From a customer perspective, Brighterion provides a real-world fraud scenario that is not all that uncommon:

It’s late at night and your customer has just left the ER with their sick child, prescription in hand. They visit the 24-hour pharmacy, wait for the prescription to be filled and use their credit card to pay. The transaction is declined. They know their payments are up to date, and the clerk says they’ll have to call their bank in the morning. They go home with a crying child and no medication. You get a call the next morning, asking “What went wrong?”

It could be several things: suspected fraud, delayed payment processing or simply a false decline. Some issues are traceable, and others are not. This mysterious process is difficult to trust and even more difficult to explain to a customer. Customers often leave their banks without a clear answer, and some vow to change FIs.

As the article explains, the development world defines a black box as a solution where users know the inputs and the final output but have no idea what process produced the decision. It's only when a mistake occurs that the complexity is noticed.

Understanding the Problem: Closed Environments Invite Errors

AI models are trained on historical data so that they can predict events and score transactions based on past events. Brighterion explains that "once the model goes into production, it receives millions of data points that then interact in billions of ways, processing decisions faster than any team of humans could achieve."

The problem: Brilliant or not, this machine learning model is making these decisions in a closed environment, understood only by the team that built the model.

In the 2021 LendIt annual survey on AI, 32% of responding financial executives cited this challenge, making it the second-highest concern after regulation and compliance.

This leaves not only the customers in the dark, wondering why the decline occurred, but also the customer service representatives who interact with them. Imagine being on the phone or at the bank's location with an angry customer, only able to provide the most basic of answers. This is a scenario that leaves both the customer and the banking representatives with a bad taste in their mouths.

Finding the Right Solution: Explainable AI

Fortunately, there is a solution.

Explainable AI, sometimes known as a “white box” model, lifts the veil off machine learning models by assigning reason codes to decisions and making them visible to users. Users can review these codes to both explain decisions and verify outcomes. For example, if an account manager or fraud investigator suspects several decisions exhibit similar bias, developers can alter the model to remove that inequity.
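To make the idea of reason codes concrete, here is a minimal sketch in Python. It is not Brighterion's implementation: it swaps a real machine learning model for a handful of hand-written rules, and every name in it (the `Rule` class, the codes `R01` to `R03`, the weights, the threshold and the transaction fields) is a hypothetical chosen for illustration. What it shows is the shape of the output: a decision that arrives together with the reasons that produced it.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Rule:
    code: str                      # hypothetical reason code shown to analysts
    description: str               # plain-language explanation of the code
    weight: float                  # amount added to the risk score when the rule fires
    fires: Callable[[dict], bool]  # test applied to a transaction


# Illustrative rules only; a production model would learn its signals from data.
RULES = [
    Rule("R01", "Amount far above the customer's typical spend", 0.40,
         lambda t: t["amount"] > 5 * t["avg_amount"]),
    Rule("R02", "Merchant not seen on this account before", 0.25,
         lambda t: t["merchant_id"] not in t["known_merchants"]),
    Rule("R03", "Transaction at an unusual hour", 0.20,
         lambda t: t["hour"] < 5),
]

DECLINE_THRESHOLD = 0.40  # assumed cut-off, chosen for the example


def score_transaction(txn: dict) -> dict:
    """Score a transaction and return the decision with its reason codes."""
    fired = [rule for rule in RULES if rule.fires(txn)]
    # List the strongest contributors first so the top reason is the most relevant.
    fired.sort(key=lambda rule: rule.weight, reverse=True)
    score = round(sum(rule.weight for rule in fired), 2)
    return {
        "score": score,
        "decision": "decline" if score >= DECLINE_THRESHOLD else "approve",
        "reason_codes": [rule.code for rule in fired],
        "reasons": [rule.description for rule in fired],
    }


# A late-night pharmacy purchase like the one in the scenario above.
pharmacy_txn = {
    "amount": 48.0,
    "avg_amount": 60.0,
    "merchant_id": "PHARM-24H",
    "known_merchants": {"GROCER-1", "FUEL-7"},
    "hour": 2,
}
result = score_transaction(pharmacy_txn)
print(result["decision"], result["reason_codes"])
```

With reason codes attached, the representative taking the call the next morning could see that the decline came from a new merchant and an unusual hour rather than a missed payment, and an analyst reviewing many such cases could judge whether those rules produce too many false declines.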

“Good explainable AI is simple to understand yet highly personalized for each given event,” Dhala says. “It must operate in a highly scalable environment processing potentially billions of events while satisfying the needs of the model custodians (developers) and the customers who are impacted. At the same time, the model must comply with regulatory and privacy requirements as per the use case and country.”

To ensure the integrity of the process, an essential component of building the model is privacy by design. Rather than reviewing a model after development, systems engineers consider and incorporate privacy at each stage of design and development. So, while reason outcomes are highly personalized, customers’ privacy is proactively protected and embedded, set as the system default.

The key to the "white box" and AI transparency? Dhala says good model governance is the answer.

This overarching umbrella creates the environment for how an organization regulates access, puts policies in place and tracks the activities and outputs for AI models. Good model governance reduces risk in case of an audit for compliance and creates the framework for ethical, transparent AI in banking that eliminates bias.

“It’s important that you don’t cause or make decisions based on discriminatory factors,” he says. “Do significant reviews and follow guidelines to ensure no sensitive data is used in the model, such as zip code, gender, or age.”
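One small piece of such a review can be automated. The sketch below is an assumption about how a team might check a model's input list against a deny-list before training; the function names, the feature names and the deny-list itself are hypothetical, with the list taken from the three examples named in the quote.

```python
# Deny-list built from the examples in the quote; a real list would be set by policy.
SENSITIVE_FEATURES = {"zip_code", "gender", "age"}


def audit_features(feature_names):
    """Return the sensitive features found in a model's input list, sorted by name."""
    normalized = {name.strip().lower() for name in feature_names}
    return sorted(normalized & SENSITIVE_FEATURES)


def assert_no_sensitive_features(feature_names):
    """Raise an error if any deny-listed feature is among the model inputs."""
    found = audit_features(feature_names)
    if found:
        raise ValueError(f"Sensitive features present in model inputs: {found}")


# Hypothetical feature list for a fraud model under review.
model_features = ["amount", "merchant_category", "Zip_Code", "hour_of_day"]
flagged = audit_features(model_features)
print(flagged)
```

A name check like this only catches features that are labeled as sensitive. It does not detect proxy variables that correlate with a sensitive attribute, which is why the manual reviews described above are still needed.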

Applying Explainable AI to Check Fraud Detection

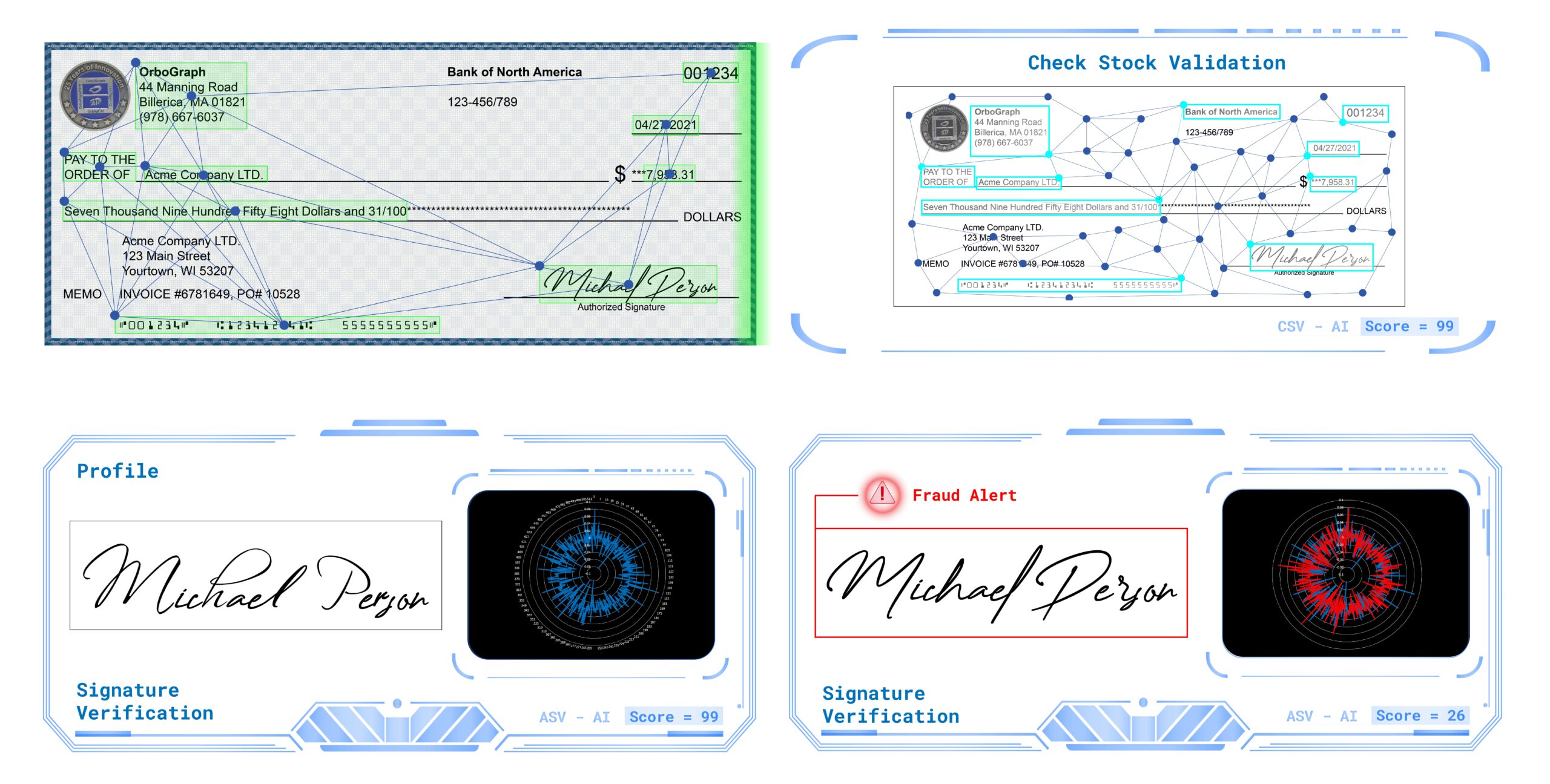

As the industry continues its deployment of AI technologies for check fraud detection, it's becoming increasingly important for both banks and their customers to understand why transactions are being flagged as fraudulent. For transaction-based fraud detection, fraud analysts need to understand what behavior within the account is being flagged. This will help them provide feedback on the models and communicate more clearly with customers about what fraudulent activity is occurring.

On the flip side, image-based check fraud detection already uses explainable AI. As the technology interrogates and analyzes check images, the system compares each item to previously cleared and flagged checks, while also extracting data from the checks and analyzing it for fraud. This approach is applied to check stock validation as well as signature verification. More importantly, the technology provides transparency to fraud analysts, including the reasons why an item was rejected or flagged for review. These reasons are reflected in the scoring on fraud review platforms.

This type of explainable AI enables fraud analysts to understand what the AI analyzed and why it flagged the item. That builds stronger trust in the system and makes it easier to give feedback to internal teams and explanations to customers -- a win-win!