Explainable AI (XAI): Challenges & How to Overcome Them

Explainable Artificial Intelligence (XAI) has become a critical requirement as AI systems are increasingly used in high-stakes decisions across finance, healthcare, government, and enterprise operations. While modern machine learning models deliver impressive accuracy, many operate as black boxes, making it difficult to understand how decisions are made.

This lack of transparency raises concerns around trust, compliance, fairness, and accountability. Explainable AI addresses these concerns by providing insights into how AI models generate outputs, helping organizations deploy AI responsibly and effectively. Additionally, XAI improves model governance processes within organizations.

What is Explainable AI

Explainable AI (XAI) refers to a set of methods and techniques that allow humans to understand, interpret, and trust the outputs of artificial intelligence systems. Where opaque machine learning models hide their reasoning, XAI focuses on making AI decisions transparent, auditable, and understandable to stakeholders.

XAI is especially important in regulated industries and use cases where AI decisions impact individuals, such as credit scoring, medical diagnosis, hiring, and autonomous systems.

Why Explainable AI Is Important

Explainable AI plays a vital role in modern AI governance and deployment:

- Builds trust with users and stakeholders

- Supports regulatory compliance (e.g., AI governance and auditability)

- Helps identify bias and fairness issues

- Improves model debugging and performance monitoring

- Enables human oversight and accountability

As AI systems grow more complex, explainability is no longer optional; it is a core requirement for responsible AI.

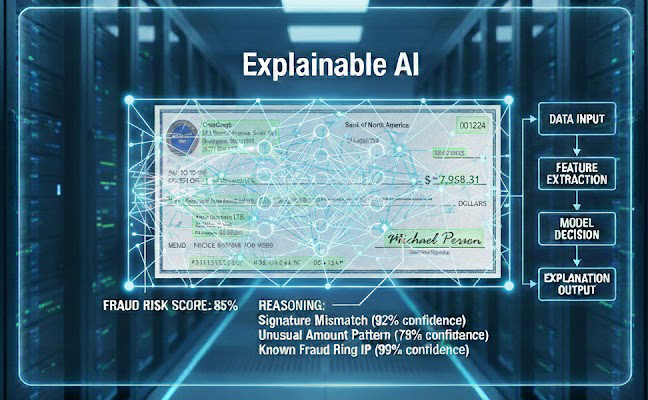

Explainable AI for Check Fraud Detection

Image-based check fraud detection already uses Explainable AI. As the system analyzes check images, it compares each item to previously cleared and flagged checks, extracts data from the check, and analyzes that data for signs of fraud.

Explainability is crucial for check fraud detection because it provides transparency into the complex machine learning and computer vision models that flag potentially fraudulent transactions. Banks and financial institutions rely on AI to analyze vast amounts of data (check images, handwriting patterns, transaction histories, and account behavior) to detect anomalies in real time. Without explainability, these AI models operate as “black boxes,” making it difficult for fraud analysts, regulators, and even customers to understand why a check was flagged. XAI allows each decision to be traced to specific features, such as signature mismatches, altered fields, or unusual deposit patterns, so analysts can validate alerts and reduce false positives that could otherwise disrupt legitimate transactions.
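To make the idea of tracing a decision to specific features concrete, here is a minimal sketch in Python. It assumes a simple linear (logistic) scoring model, which is far simpler than the computer vision models described above; the feature names come from the examples in the text, while the weights, the bias, and the `explain_check` function are hypothetical and chosen purely for illustration. For a linear model, each feature's contribution to the score is its weight multiplied by its value, so the explanation follows directly from the model itself.

```python
import math

# Hypothetical weights for an illustrative linear fraud model.
# These values are assumptions, not taken from any real system.
WEIGHTS = {
    "signature_mismatch": 2.0,
    "altered_field": 1.5,
    "unusual_deposit_pattern": 1.0,
}
BIAS = -3.0  # hypothetical baseline log-odds when no signal is present


def explain_check(features):
    """Return the fraud probability and per-feature contributions.

    Each contribution is weight * value, i.e. the amount that feature
    adds to the model's log-odds. Contributions are sorted so the
    strongest drivers of the decision come first.
    """
    contributions = {
        name: WEIGHTS[name] * features.get(name, 0.0) for name in WEIGHTS
    }
    log_odds = BIAS + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-log_odds))
    ranked = sorted(
        contributions.items(), key=lambda item: abs(item[1]), reverse=True
    )
    return probability, ranked


# A check with a signature mismatch and an unusual deposit pattern.
probability, ranked = explain_check(
    {
        "signature_mismatch": 1.0,
        "altered_field": 0.0,
        "unusual_deposit_pattern": 1.0,
    }
)

print(f"fraud probability: {probability:.2f}")
for name, contribution in ranked:
    print(f"{name}: {contribution:+.2f}")
```

An analyst reviewing this alert would see which signals drove the score and by how much, which is the kind of evidence needed to confirm or dismiss a flag. More complex models generally need dedicated attribution techniques to produce a comparable breakdown.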

Beyond operational accuracy, explainable AI is critical for regulatory compliance and customer trust. Financial institutions must justify fraud detection decisions under banking regulations and internal audit requirements, and provide clear explanations in case of disputes. By making AI outputs interpretable, XAI allows fraud teams to explain decisions to stakeholders, improve model performance through iterative feedback, and ensure that automated systems align with legal, ethical, and operational standards. In essence, explainable AI transforms check fraud detection from a purely automated process into a transparent, accountable, and trustworthy system.

Key Challenges of Explainable AI

As noted in a blog post on Modernizing Omnichannel Check Fraud Detection, XAI enables transparency for check fraud detection, but there are still challenges to overcome.

How to Overcome Explainable AI Challenges

The Future of Explainable AI

Explainable AI enables organizations to deploy AI systems that are transparent, trustworthy, and accountable. While challenges such as complexity, trade-offs, and standardization remain, practical strategies and modern tools make it possible to overcome them.

By embedding explainability into AI design and governance, organizations can unlock the full value of AI while maintaining trust, compliance, and ethical responsibility.