AI and the Resurrection of Moore’s Law

Moore’s Law famously states that the number of transistors on a microchip doubles roughly every two years, while the cost of computers is halved. A new essay on Medium.com explores how Moore’s Law had actually been “phasing out” for some time:

Moore’s Law, one of the fundamental laws indicating the exponential progress in the tech industry, especially electronic engineering, has been slowing down lately (since 2005, to be more precise), and has led many in this sector to believe this law to no longer hold true.

However, a new player emerged to bring Moore’s Law back to life:

That was, until Artificial Intelligence joined the arena! Since then, the game changed, and Moore’s law is slowly being revived.

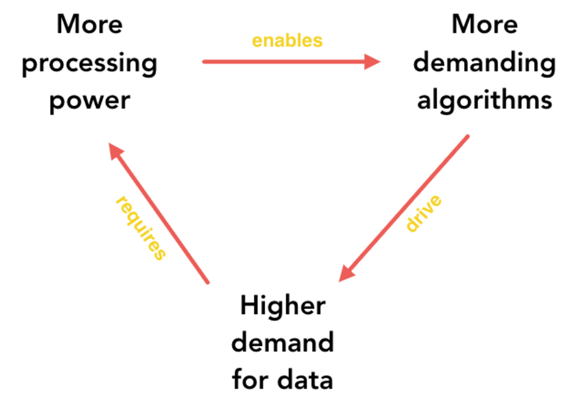

Fast forward to 2018: we have, and are still gathering, massive amounts of data. We have and are still developing more and more advanced algorithms (Generative Adversarial Networks and Capsule Networks stand as strong examples.) But do we have the hardware to crunch all those calculations within reasonable time? And if we do, can it be done without having all those GPUs cause another global warming on their own by literally heating up from all the processing? (See Azeem’s Diagram above)

Right there, Artificial Intelligence is imposing a constraint: keep the power constant or decrease it, but increase performance… doesn’t that sound a bit familiar to some scaling rule we have just seen? Exactly, by forcing the tech industry to come up with new processors which can perform more calculations per unit time, while maintaining power consumption and price, Artificial Intelligence is imposing Dennard Scaling again, and hence forcing Moore’s Law back to life!
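To make the scaling argument in the passage above concrete, here is a minimal Python sketch of the textbook idealization of Dennard scaling next to the Moore’s Law doubling rule. The function names and the shrink factor `k` are illustrative assumptions rather than anything taken from the essay, and real chips stopped following this ideal model once supply voltage could no longer be lowered in step with transistor size.

```python
def moore_transistors(initial_count, years, doubling_period_years=2.0):
    """Projected transistor count if the count doubles every doubling period."""
    return initial_count * 2 ** (years / doubling_period_years)


def dennard_scale(k):
    """Idealized Dennard scaling for a linear shrink factor k > 1.

    Assumes capacitance and voltage scale by 1/k and frequency by k,
    with dynamic power per transistor proportional to C * V^2 * f.
    All values are relative to the unscaled transistor (= 1.0).
    """
    capacitance = 1 / k
    voltage = 1 / k
    frequency = k
    power_per_transistor = capacitance * voltage ** 2 * frequency  # 1 / k^2
    area_per_transistor = 1 / k ** 2
    # Power and area shrink by the same factor, so power density is unchanged.
    power_density = power_per_transistor / area_per_transistor
    return {
        "frequency": frequency,
        "power_per_transistor": power_per_transistor,
        "area_per_transistor": area_per_transistor,
        "power_density": power_density,
    }


if __name__ == "__main__":
    # Ten years of doubling every two years is five doublings (a 32x increase).
    print(moore_transistors(1_000, 10))
    # Halving transistor dimensions (k = 2) under the ideal model.
    print(dennard_scale(2))
```

In this idealized model, shrinking a transistor raises its speed while the power drawn per unit of chip area stays flat, which is the "more performance at constant power" constraint the essay describes.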

Meanwhile, experts have offered some predictions on the future of AI and ML in eight different sectors. We are, of course, concerned with AI and healthcare:

“When it comes to healthcare, there’s a lot machines can do to help the doctor. I don’t see a future where we actually don’t have doctors guiding, but a lot of the busy work doctors have to do is better done using artificial intelligence. If you think about a doctor’s career, thirty or forty years, the number of patients you can see during that time period is very limited. Many doctors are burnt out, overworked — it’s a serious issue. They also don’t have time to keep themselves up to date on the most recent research, treatment techniques, and advancements in medication. Machines can play a very important role here. Artificial intelligence can access a much larger set of patient data of how they were treated and what the outcomes were. You can imagine machines being in a much better position, because machines can start doing the busy work around the diagnosis and humans can actually interact with the patient and work with artificial intelligence to improve outcomes. That, in my mind, is super exciting.” – Serkan Kutan, CTO at Zocdoc

The applications for AI in healthcare are limitless. From performing the “busy work” around diagnosing patients and improving their health, to observable and ongoing benefits to healthcare remittance and payment automation through solutions like Access EOB Conversion, we can expect growing demand for AI to revive the emphasis on hardware and chip efficiency, which is good news all around!
