GPU Cost vs. Performance: Assessing Financial Institutions’ Artificial Intelligence Needs

As technology evolves, demand for processing power has grown rapidly -- particularly in artificial intelligence (AI) and data analysis.

Central Processing Units (CPUs) have long been the backbone of computing -- particularly in banking. However, Graphics Processing Units (GPUs) have emerged as a game-changer, outperforming CPUs on highly parallel, data-intensive workloads such as AI.

Let's take a deeper dive into why GPUs outperform CPUs for these workloads and explore how banks can adopt GPUs for AI affordably.

The GPU Advantage

Traditionally, CPUs have been the workhorses of computing, designed to handle a wide variety of general-purpose tasks with a relatively small number of powerful cores. They are not, however, optimized for tasks that require massive parallel processing, such as AI and deep learning.

GPUs, on the other hand, were originally designed to render complex graphics for video games. Due to their parallel architecture and thousands of cores, GPUs turned out to be remarkably efficient at handling repetitive calculations simultaneously.

This inherent parallelism makes GPUs exceptionally powerful for AI applications. For tasks like image and speech recognition, natural language processing, and complex data analysis, CPUs cannot match the massive parallel processing capability of GPUs. Their ability to perform numerous parallel calculations significantly reduces processing time. That delivers results faster and makes it practical to train and run larger, more accurate models.
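The phrase "numerous parallel calculations" can be made concrete with a minimal sketch in plain Python (standard library only). It assumes a toy fully connected layer. Each output neuron is the dot product of the input with that neuron's own row of weights, and no output depends on another, so all of them can be computed at the same time. The thread pool stands in for a GPU's many cores purely as an illustration. It will not make pure Python faster. The function names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor

def dot(row, x):
    """One output neuron: the dot product of its weight row with the input."""
    return sum(w * xi for w, xi in zip(row, x))

def dense_layer_sequential(weights, x):
    """Compute every output neuron one after another, as a single core would."""
    return [dot(row, x) for row in weights]

def dense_layer_parallel(weights, x, workers=4):
    """Compute output neurons concurrently; each task is independent of the others."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns results in input order, so outputs line up with rows
        return list(pool.map(lambda row: dot(row, x), weights))

if __name__ == "__main__":
    # Toy layer: 3 inputs -> 4 outputs (arbitrary illustrative weights)
    weights = [
        [1, 0, 0],
        [0, 1, 0],
        [0, 0, 1],
        [1, 1, 1],
    ]
    x = [2, 3, 4]
    print(dense_layer_sequential(weights, x))  # [2, 3, 4, 9]
    print(dense_layer_parallel(weights, x))    # [2, 3, 4, 9]
```

Both versions produce the same answer regardless of the order in which the tasks run. The work can therefore be spread across as many cores as are available. A GPU applies the same principle with thousands of cores built specifically for it.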

As explained by Towards Data Science:

If your neural network has around 10, 100, or even 100,000 parameters, a computer would still be able to handle this in a matter of minutes, or even hours at the most.

But what if your neural network has more than 10 billion parameters? It would take years to train these kinds of systems employing the traditional approach. Your computer would probably give up before you’re even one-tenth of the way.

A neural network that takes search input and predicts from 100 million outputs, or products, will typically end up with about 2,000 parameters per product. So you multiply those, and the final layer of the neural network is now 200 billion parameters. And I have not done anything sophisticated. I’m talking about a very, very dead simple neural network model. — a Ph.D. student at Rice University.
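The arithmetic in the quote is easy to check, and it also shows why model size becomes a hardware question. The sketch below multiplies the quoted figures. It then estimates the memory needed just to store that final layer's weights, assuming 32-bit floats (4 bytes per parameter). Activations, optimizer state, and the rest of the network are ignored.

```python
BYTES_PER_FP32_PARAM = 4  # a 32-bit float occupies 4 bytes

def final_layer_params(num_outputs, params_per_output):
    """Parameters in the output layer, using the quote's simplified model."""
    return num_outputs * params_per_output

def weight_memory_gb(num_params, bytes_per_param=BYTES_PER_FP32_PARAM):
    """Memory (decimal GB) needed just to hold the weights."""
    return num_params * bytes_per_param / 1e9

if __name__ == "__main__":
    params = final_layer_params(100_000_000, 2_000)  # figures from the quote
    print(f"{params:,} parameters")                  # 200,000,000,000 parameters
    print(f"{weight_memory_gb(params):,.0f} GB of fp32 weights")  # 800 GB
```

At roughly 800 GB for the weights alone, this single layer would exceed the memory of any individual GPU mentioned in this article.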

In check fraud detection and deposit automation, hundreds -- if not thousands -- of parameters are required to achieve 95% detection of fraudulent items and 99%+ accuracy and read rates. Multiply that across thousands -- or tens of thousands -- of items per day, and it becomes clear why CPUs are not suitable for running these AI and deep learning models: the result would be major latency issues and processing delays.
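The volume argument can be framed as a simple capacity check. Total processing time is the number of passes needed for a day's items multiplied by the time each pass takes. The sketch below uses purely hypothetical timings and batch sizes, not measurements of any particular CPU or GPU, to show how the calculation works. Substitute figures from your own benchmarks.

```python
import math

def processing_hours(items_per_day, seconds_per_batch, batch_size=1):
    """Wall-clock hours to process one day's items.

    batch_size is how many items the hardware processes in a single pass;
    seconds_per_batch is how long one such pass takes.
    """
    batches = math.ceil(items_per_day / batch_size)
    return batches * seconds_per_batch / 3600

if __name__ == "__main__":
    items = 20_000  # hypothetical daily check volume
    # Hypothetical timings for illustration only, not benchmarks of real hardware
    cpu_hours = processing_hours(items, seconds_per_batch=2.0, batch_size=1)
    gpu_hours = processing_hours(items, seconds_per_batch=4.0, batch_size=64)
    print(f"CPU, one item per pass: {cpu_hours:.2f} h")
    print(f"GPU, 64 items per pass: {gpu_hours:.2f} h")
```

The comparison is only as good as the timings fed into it. A multi-core CPU can also batch work, so measure both options on your own models before drawing conclusions.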

In addition, AI has become a blanket term for many different types of functions. Simple vision or decision tree models, for example, can be called AI, and they may run perfectly well on existing CPU hardware. However, they are far less complex than convolutional neural networks (CNNs) and deep neural networks (DNNs). Because of the parameter counts described above, both of those need the parallelism of GPUs.
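This sketch shows how quickly parameter counts grow once you move from simple models to neural networks. It counts weights and biases with the standard formulas:

- Fully connected (dense) layer: inputs × outputs + outputs.
- 2D convolutional layer: kernel height × kernel width × input channels × output channels + output channels.

The layer sizes are a hypothetical toy network, not a production check-processing model.

```python
def dense_params(n_in, n_out):
    """Weights plus one bias per output neuron."""
    return n_in * n_out + n_out

def conv2d_params(kernel_h, kernel_w, c_in, c_out):
    """One kernel_h x kernel_w x c_in filter per output channel, plus biases."""
    return kernel_h * kernel_w * c_in * c_out + c_out

if __name__ == "__main__":
    # Hypothetical small CNN for a grayscale image; sizes chosen for illustration
    layers = {
        "conv1 (3x3, 1->32)": conv2d_params(3, 3, 1, 32),
        "conv2 (3x3, 32->64)": conv2d_params(3, 3, 32, 64),
        "dense (64*16*16 -> 128)": dense_params(64 * 16 * 16, 128),
        "output (128 -> 10)": dense_params(128, 10),
    }
    for name, count in layers.items():
        print(f"{name:26s} {count:>12,}")
    print(f"{'total':26s} {sum(layers.values()):>12,}")
```

Even this toy network has over two million parameters, most of them in the single dense layer, and every item passed through the network touches all of them.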

Overcoming Cost Concerns

Banks, like businesses in any other industry, are keen to embrace AI to enhance customer service, detect fraudulent activity, and streamline operations. However, the initial costs of integrating AI and GPUs can appear daunting. While high-end GPUs do come with hefty price tags, the market now offers a diverse range of GPUs to suit various needs and budgets.

Not every AI application requires the most cutting-edge, top-of-the-line GPU. Depending on the complexity of the task or the number of items needing to be processed, mid-range or even entry-level GPUs can deliver impressive performance.

OrboGraph's OrbNet AI Lab has collaborated with hundreds of financial institutions to test GPUs across all price ranges. The most powerful GPUs, such as the NVIDIA A100, are the most expensive and often out of stock. However, they are often needed only for model training, not for inference (running trained models on live items). We've seen banks use less powerful GPUs -- such as the NVIDIA T4, A2, and A10 -- to meet their daily processing needs with great success.
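One reason training calls for larger GPUs than inference is memory. A common rule of thumb for standard 32-bit training with the Adam optimizer is about 16 bytes per parameter: weights, gradients, and Adam's two moment estimates at 4 bytes each. Inference in fp32 needs only the 4-byte weights, or 2 bytes per parameter at 16-bit precision. The sketch below applies those figures. It deliberately ignores activations, batch size, and framework overhead, so treat its results as lower bounds rather than sizing guidance. The 50-million-parameter model is hypothetical.

```python
# Approximate bytes per parameter (rule of thumb; excludes activations and overhead)
BYTES_PER_PARAM = {
    "train_fp32_adam": 4 + 4 + 4 + 4,  # weights + gradients + Adam m + Adam v
    "infer_fp32": 4,                    # weights only
    "infer_fp16": 2,                    # weights only, half precision
}

def min_memory_gb(num_params, mode):
    """Lower-bound memory estimate in decimal GB for the given mode."""
    return num_params * BYTES_PER_PARAM[mode] / 1e9

if __name__ == "__main__":
    params = 50_000_000  # hypothetical model size
    for mode in BYTES_PER_PARAM:
        print(f"{mode:16s} >= {min_memory_gb(params, mode):.2f} GB")
```

Under these assumptions, training needs roughly four to eight times the memory of inference before activations are counted. That gap is one reason a smaller, cheaper card can serve a model that was trained on a larger one.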

The key is to identify the specific requirements of each AI application and select a GPU that aligns with them. By choosing the right GPU for their needs, banks can keep their initial entry costs affordable.
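The selection step can be expressed as a small filter:

1. Keep only the GPU options that meet the application's memory requirement.
2. Work out how many cards each option needs to reach the required throughput.
3. Choose the option with the lowest total cost.

Everything in the sketch is a placeholder. The tier names, prices, memory sizes, and throughputs are hypothetical and do not describe any real product, so plug in your own quotes and benchmark results.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class GpuOption:
    name: str
    memory_gb: float
    items_per_hour: float  # measured inference throughput for your model
    unit_cost: float       # purchase or rental price per card (any currency unit)

def cheapest_fit(options, model_memory_gb, items_per_hour_needed):
    """Return (option, cards_needed, total_cost) for the lowest-cost fit, or None."""
    best = None
    for opt in options:
        if opt.memory_gb < model_memory_gb:
            continue  # the model would not fit on one card
        cards = math.ceil(items_per_hour_needed / opt.items_per_hour)
        total = cards * opt.unit_cost
        if best is None or total < best[2]:
            best = (opt, cards, total)
    return best

if __name__ == "__main__":
    # Hypothetical tiers with placeholder numbers
    options = [
        GpuOption("entry", memory_gb=8, items_per_hour=5_000, unit_cost=1.0),
        GpuOption("mid", memory_gb=20, items_per_hour=12_000, unit_cost=2.5),
        GpuOption("high", memory_gb=40, items_per_hour=40_000, unit_cost=10.0),
    ]
    opt, cards, total = cheapest_fit(options, model_memory_gb=4, items_per_hour_needed=8_000)
    print(f"{opt.name}: {cards} card(s), total cost {total}")
```

With these placeholder numbers, two entry-tier cards (total cost 2.0) beat one mid-tier card (2.5). This mirrors the article's point that the top tier is not automatically the right choice for inference.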

Efficiency and Scalability

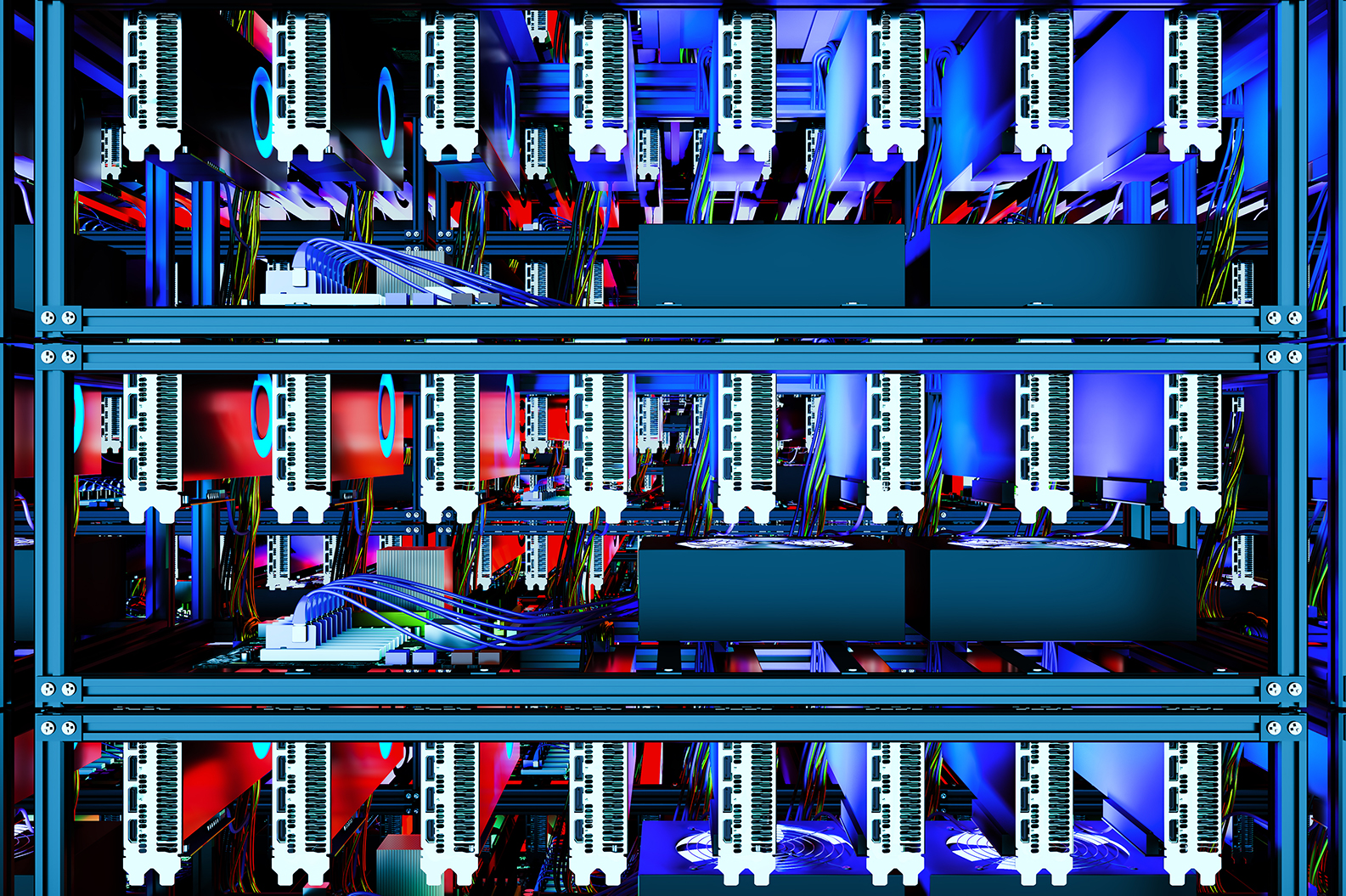

Another factor banks should consider is the efficiency and scalability of GPU setups. Traditional CPU clusters can be challenging to scale efficiently, whereas GPUs offer a more streamlined approach. Many AI frameworks are optimized to harness multiple GPUs working in parallel, which means banks can grow their AI infrastructure by adding more GPUs as needed.
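A simple way to picture "adding more GPUs as needed" is data parallelism for inference. The day's items are split into roughly equal shards, one per GPU, and each GPU runs the same model on its shard independently. The sketch below shows only the splitting step in plain Python. `split_into_shards` and the item IDs are hypothetical. In practice, the framework or serving layer you use would handle device placement.

```python
def split_into_shards(items, num_gpus):
    """Split items into num_gpus contiguous shards whose sizes differ by at most one."""
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")
    base, extra = divmod(len(items), num_gpus)
    shards, start = [], 0
    for i in range(num_gpus):
        size = base + (1 if i < extra else 0)  # first `extra` shards get one more item
        shards.append(items[start:start + size])
        start += size
    return shards

if __name__ == "__main__":
    items = [f"check-{n}" for n in range(10)]  # hypothetical item IDs
    for gpus in (1, 2, 4):
        sizes = [len(s) for s in split_into_shards(items, gpus)]
        print(f"{gpus} GPU(s): shard sizes {sizes}")
```

For ten items the shard sizes are [10], [5, 5], and [3, 3, 2, 2]. Because the shards are independent, each added GPU shrinks the per-GPU workload without changing the model or the rest of the pipeline, ignoring coordination overhead.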

This flexibility allows for cost-effective scalability, so banks can accommodate growing AI workloads without a complete infrastructure overhaul. In addition, major public cloud providers such as AWS, Google Cloud, and Microsoft Azure offer these GPUs in highly scalable environments.

The Power of GPUs

As banks strive to remain competitive in a digital age characterized by data-driven insights, AI adoption (and specifically, complex CNN, DNN, and NLM models) becomes not just an option but a necessity.

GPUs, with their massive parallel processing capabilities, give banks a practical way to harness the potential of this advanced AI technology. And the misconception that GPU implementation is cost-prohibitive can be dispelled by recognizing the wide range of GPU options available on the market.

By strategically selecting GPUs that match the requirements of specific AI applications, banks can embark on a journey toward improved efficiency, customer experience, and operational excellence. In this dynamic landscape, the true power of GPUs lies not just in their processing prowess, but also in their ability to empower banks to innovate and thrive in an AI-driven world.